Try to deploy YOLOv5 on coolpi


Introduction

YOLOv5 is Ultralytics' open-source family of object detection models. Ultralytics describes it as "the world's most loved vision AI", representing its open-source research into future vision AI methods and incorporating lessons learned and best practices evolved over thousands of hours of research and development.

FastDeploy is an open-source, easy-to-use deployment repository that can quickly deploy YOLOv5 on the CoolPI-4B.

Model Speed Table

In addition to YOLOv5, we also support the deployment of YOLOX, YOLOv7, and PPYOLOE. The table below lists each model's end-to-end inference time:

| Model Name | Quantized | Speed (ms) | Download Address |
| --- | --- | --- | --- |
| yolov5-s-relu | Yes | 70 | https://bj.bcebos.com/paddlehub/fastdeploy/rknpu2/yolov5-s-relu.zip |
| yolov7-tiny | Yes | 58 | https://bj.bcebos.com/paddlehub/fastdeploy/rknpu2/yolov7-tiny.zip |
| yolox-s | Yes | 130 | https://bj.bcebos.com/paddlehub/fastdeploy/rknpu2/yolox-s.zip |
| ppyoloe | Yes | 141 | https://bj.bcebos.com/paddlehub/fastdeploy/rknpu2/ppyoloe_plus_crn_s_80e_coco.zip |

C++ Demo

Developing AI applications in C++ takes more effort, but C++ code can be combined with other useful libraries on the CoolPI-4B. For your convenience, we provide a C++ demo.

    // Copyright (c) 2022 PaddlePaddle Authors. All Rights Reserved.
    //
    // Licensed under the Apache License, Version 2.0 (the "License");
    // you may not use this file except in compliance with the License.
    // You may obtain a copy of the License at
    //
    //     http://www.apache.org/licenses/LICENSE-2.0
    //
    // Unless required by applicable law or agreed to in writing, software
    // distributed under the License is distributed on an "AS IS" BASIS,
    // WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    // See the License for the specific language governing permissions and
    // limitations under the License.

    #include <iostream>
    #include <string>

    #include "fastdeploy/vision.h"

    void RKNPU2Infer(const std::string& model_file,
                     const std::string& image_file) {
      // Configure the runtime to run on the RKNPU2 backend.
      auto option = fastdeploy::RuntimeOption();
      option.UseRKNPU2();

      // Load the RKNN-format YOLOv5 model.
      auto format = fastdeploy::ModelFormat::RKNN;
      auto model = fastdeploy::vision::detection::RKYOLOV5(model_file, option,
                                                           format);

      // Read the test image and stop early if it cannot be loaded.
      auto im = cv::imread(image_file);
      if (im.empty()) {
        std::cerr << "Failed to read image: " << image_file << std::endl;
        return;
      }

      fastdeploy::vision::DetectionResult res;
      fastdeploy::TimeCounter tc;
      tc.Start();
      if (!model.Predict(im, &res)) {
        std::cerr << "Failed to predict." << std::endl;
        return;
      }
      // Draw detections with a score of at least 0.5.
      // Note: the timed span covers both prediction and visualization.
      auto vis_im = fastdeploy::vision::VisDetection(im, res, 0.5);
      tc.End();
      tc.PrintInfo("RKYOLOV5 in RKNN");

      std::cout << res.Str() << std::endl;
      cv::imwrite("vis_result.jpg", vis_im);
      std::cout << "Visualized result saved in ./vis_result.jpg" << std::endl;
    }

    int main(int argc, char* argv[]) {
      if (argc < 3) {
        std::cout << "Usage: infer_demo path/to/model_file path/to/image, "
                     "e.g. ./infer_demo ./yolov5-s-relu.rknn ./test.jpeg"
                  << std::endl;
        return -1;
      }

      RKNPU2Infer(argv[1], argv[2]);
      return 0;
    }

Python Demo

In addition to using C++, you can also use FastDeploy's Python API for rapid development.

    # Copyright (c) 2022 PaddlePaddle Authors. All Rights Reserved.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.
    import argparse

    import cv2
    import fastdeploy as fd


    def parse_arguments():
        parser = argparse.ArgumentParser()
        parser.add_argument(
            "--model_file", required=True, help="Path of rknn model.")
        parser.add_argument(
            "--image", type=str, required=True, help="Path of test image file.")
        return parser.parse_args()


    if __name__ == "__main__":
        args = parse_arguments()
        model_file = args.model_file

        # Configure the runtime and load the model.
        runtime_option = fd.RuntimeOption()
        runtime_option.use_rknpu2()
        model = fd.vision.detection.RKYOLOV5(
            model_file,
            runtime_option=runtime_option,
            model_format=fd.ModelFormat.RKNN)

        # Run detection on the image.
        im = cv2.imread(args.image)
        result = model.predict(im)
        print(result)

        # Visualize the result.
        vis_im = fd.vision.vis_detection(im, result, score_threshold=0.5)
        cv2.imwrite("visualized_result.jpg", vis_im)
        print("Visualized result saved in ./visualized_result.jpg")

Result

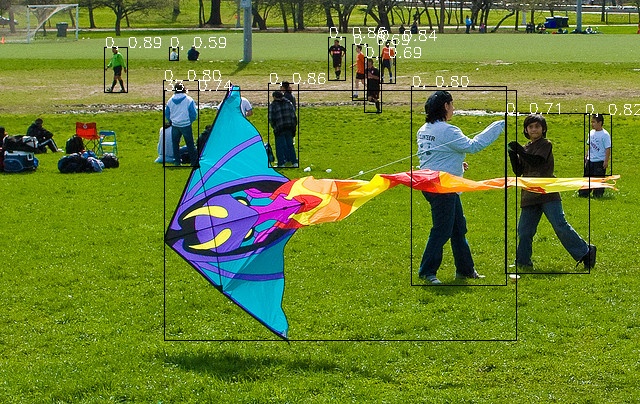

input image


output image
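
Besides drawing the boxes, the detection result can be post-processed in plain Python. The sketch below is only an illustration: it assumes the result object exposes parallel lists named `boxes`, `scores`, and `label_ids` (check this against your FastDeploy version), and the helpers `filter_detections` and `count_per_label` are hypothetical names, not part of FastDeploy. The sample values are made up so the snippet runs without a board or a model.

```python
from collections import Counter
from typing import List, Sequence, Tuple

# One detection: (box as [x1, y1, x2, y2], score, label_id).
Detection = Tuple[List[float], float, int]


def filter_detections(
    boxes: Sequence[Sequence[float]],
    scores: Sequence[float],
    label_ids: Sequence[int],
    score_threshold: float = 0.5,
) -> List[Detection]:
    """Keep only detections whose score is at least score_threshold."""
    kept = []
    for box, score, label_id in zip(boxes, scores, label_ids):
        if score >= score_threshold:
            kept.append((list(box), float(score), int(label_id)))
    return kept


def count_per_label(detections: Sequence[Detection]) -> Counter:
    """Count how many kept detections belong to each label id."""
    return Counter(label_id for _, _, label_id in detections)


if __name__ == "__main__":
    # Made-up values standing in for result.boxes, result.scores and
    # result.label_ids (assumed attribute names).
    boxes = [
        [10.0, 20.0, 110.0, 220.0],
        [50.0, 60.0, 90.0, 120.0],
        [0.0, 0.0, 30.0, 30.0],
    ]
    scores = [0.91, 0.42, 0.77]
    label_ids = [0, 0, 16]

    kept = filter_detections(boxes, scores, label_ids, score_threshold=0.5)
    for box, score, label_id in kept:
        print(f"label={label_id} score={score:.2f} box={box}")
    print(dict(count_per_label(kept)))
```

With a real model, the three lists would come from the object returned by `model.predict(im)`, and the threshold here plays the same role as the `score_threshold=0.5` passed to `vis_detection` in the demo.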